Humberto Maturana, the Chilean biologist and philosopher, said, “We do not perceive the world we see. We see the world we know how to perceive.” The same cannot be said about AI, and it is the absence of this quality that endangers academic integrity. AI systems harm academic work because their present nature is distinct from that of living beings, and so their use must be heavily regulated.

The ever-growing popularity of AI makes it clear that its presence in our lives is only increasing. Many support this development, while others argue that AI is unfit and dangerous to use. Changes like these must be carefully reflected upon to avoid unintended consequences in the future. They must also be judged on the basis of their fundamental assumptions rather than their consequences or outcomes.

In 1971, Maturana and his student Francisco Varela formulated a new notion called autopoiesis, which, translated from Greek, means self-production. Its opposite is allopoiesis, meaning creation by another. Today, autopoiesis is accepted by much of the scientific community as a definition of life in all of its diversity.

Bodily existence, a physical boundary separating the organism from its environment, is essential to the processes of life.

The internal structure of a living being triggers a process of discriminating and selecting elements of its environment, and in turn, the selected environment fosters the emergence of the living organism. This co-emergence of environment and living structure gives rise to the process of life, and simultaneously and equivalently to the process of cognition.

Autopoietic systems are also historical products, which reflects the idea that “we see the world we know how to perceive.” Every living system, in its interaction with the environment (which includes other, distinct living systems), creates a unique reality, in the most literal sense of the word.

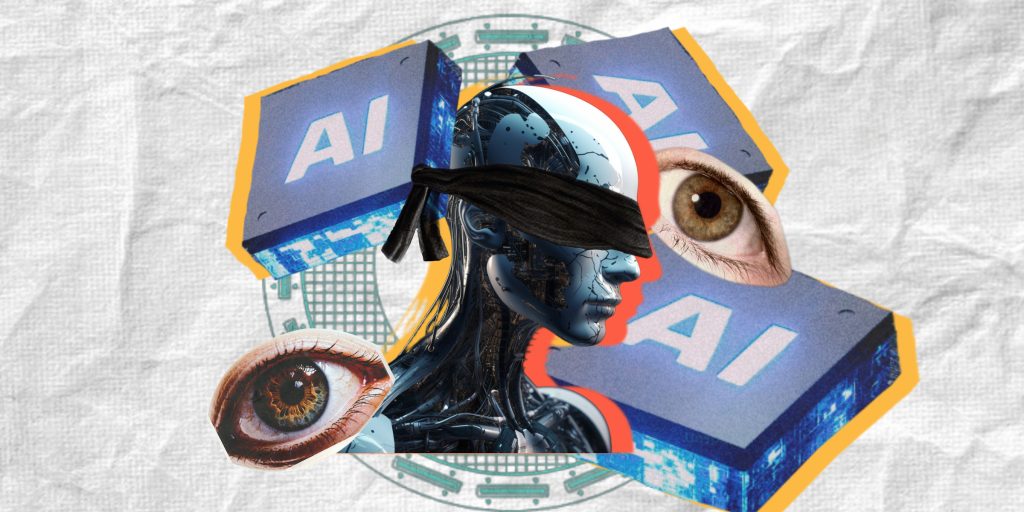

AI is an allopoietic system. It lacks a physical boundary that would separate it from its environment and enable interaction with it. It is this lack of physical constraints that results in its amorality, as described by Chomsky and others: the endorsement of ethical and unethical decisions alike, or the inability to distinguish the possible from the impossible.

AI also lacks a continuous history of interactions and experiences with its environment, which prevents it from shaping a distinct worldview on which to base its decisions and interpretations. AI does not “live” and, as such, has formed neither cognition nor a unique reality. It does not know how to perceive the world.

This is why the use of AI threatens academic integrity at its core. By using it in our academic work, we infringe on the subjective experiences that have formed our identity. A quality essential to cooperation between people is the ability to put ourselves in someone else’s shoes.

That is to say, we must understand each other’s realities as well as the world we all share, whose professional manifestation is our academic work. AI systems are unfit to engage in such discourse, the purpose of which is to contribute a unique point of view.

There is harm in having a conversation partner who is not only morally unconstrained but who also, according to Chomsky, “refuses to take a stand on anything [and] pleads … ignorance … [and] lack of intelligence.”

But AI is trained on data. Doesn’t that constitute past experience on which to base its decisions? To a degree, it does, which is why AI is not merely a camera or a microphone that records data. Experiments in which AI models trained on malicious data produced correspondingly malicious results attest to that fact.

Still, this is merely a static form of history. Once trained, an AI model remains fixed; it does not change through its own ongoing experience of the world. A stray cat that grew up fearing humans because it was mistreated can still be domesticated, because it never stops experiencing life. It continuously builds on its history and can develop in truly amazing and unexpected ways. AI lacks this capability, because its history is static.

Once AI has had the opportunity to experience the world through visual and tactile sensors of its own, it may become a unique conversation partner, one humanity has never had.

Until that day arrives, however, its use must be restricted to specific fields of research where its computational and pattern-recognition abilities are indispensable.

History shows us that every new technology brings second-order effects. It also shows that steps must be taken to avoid those unintended consequences without sacrificing the technology’s strengths.

Unless it is constrained, modern-day AI, with its detachment from the process of life, its apathy, and its ambiguity, will overstep our moral and ethical boundaries and violate the values and integrity of our academic work.